Find Node.js test coverage

io.moderne.prethink.calm.FindNodeTestCoverage

Identifies test methods in Jest, Mocha, and Vitest test files. Detects describe(), it(), and test() blocks and populates the TestMapping table.
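To make the detected constructs concrete, here is a minimal sketch that scans a test file's text for describe(), it(), and test() calls whose first argument is a string literal. It is a regex-based illustration only and should not be read as how the recipe works internally; the names findTestBlocks, TestBlock, and BLOCK_PATTERN are hypothetical.

```typescript
// Hypothetical illustration: a plain regex scan, not the recipe's implementation.
type TestBlockKind = "describe" | "it" | "test";

interface TestBlock {
  kind: TestBlockKind;
  title: string;
  line: number; // 1-based line on which the call starts
}

// Matches describe("..."), it('...'), and test(`...`) with a string-literal title.
// Variants such as it.only(...) or test.each(...) are deliberately not handled.
const BLOCK_PATTERN = /\b(describe|it|test)\s*\(\s*(["'`])((?:\\.|(?!\2).)*)\2/;

function findTestBlocks(source: string): TestBlock[] {
  const blocks: TestBlock[] = [];
  // Build a fresh global regex per call so lastIndex state is never shared.
  const pattern = new RegExp(BLOCK_PATTERN.source, "g");
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(source)) !== null) {
    // Count the newlines before the match to derive its line number.
    const line = source.slice(0, match.index).split("\n").length;
    blocks.push({ kind: match[1] as TestBlockKind, title: match[3], line });
  }
  return blocks;
}

const sample = [
  'describe("cart", () => {',
  '  it("adds an item", () => {});',
  "  test('removes an item', () => {});",
  "});",
].join("\n");

// blocks holds three entries: describe "cart", it "adds an item", test "removes an item".
const blocks = findTestBlocks(sample);
```

A text scan like this also matches calls inside comments or on unrelated objects (for example obj.test("x")), which is one reason a syntax-tree-based approach is generally preferable for real analysis.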

Recipe source

This recipe is only available to users of Moderne.

This recipe is available under the Moderne Proprietary License.

Used by

This recipe is used as part of the following composite recipes:

Usage

This recipe has no required configuration options. Users of Moderne can run it via the Moderne CLI.


You will need to have configured the Moderne CLI on your machine before you can run the following command.

mod run . --recipe FindNodeTestCoverage

If the recipe is not available locally, then you can install it using:

mod config recipes jar install io.moderne.recipe:rewrite-prethink:0.3.0

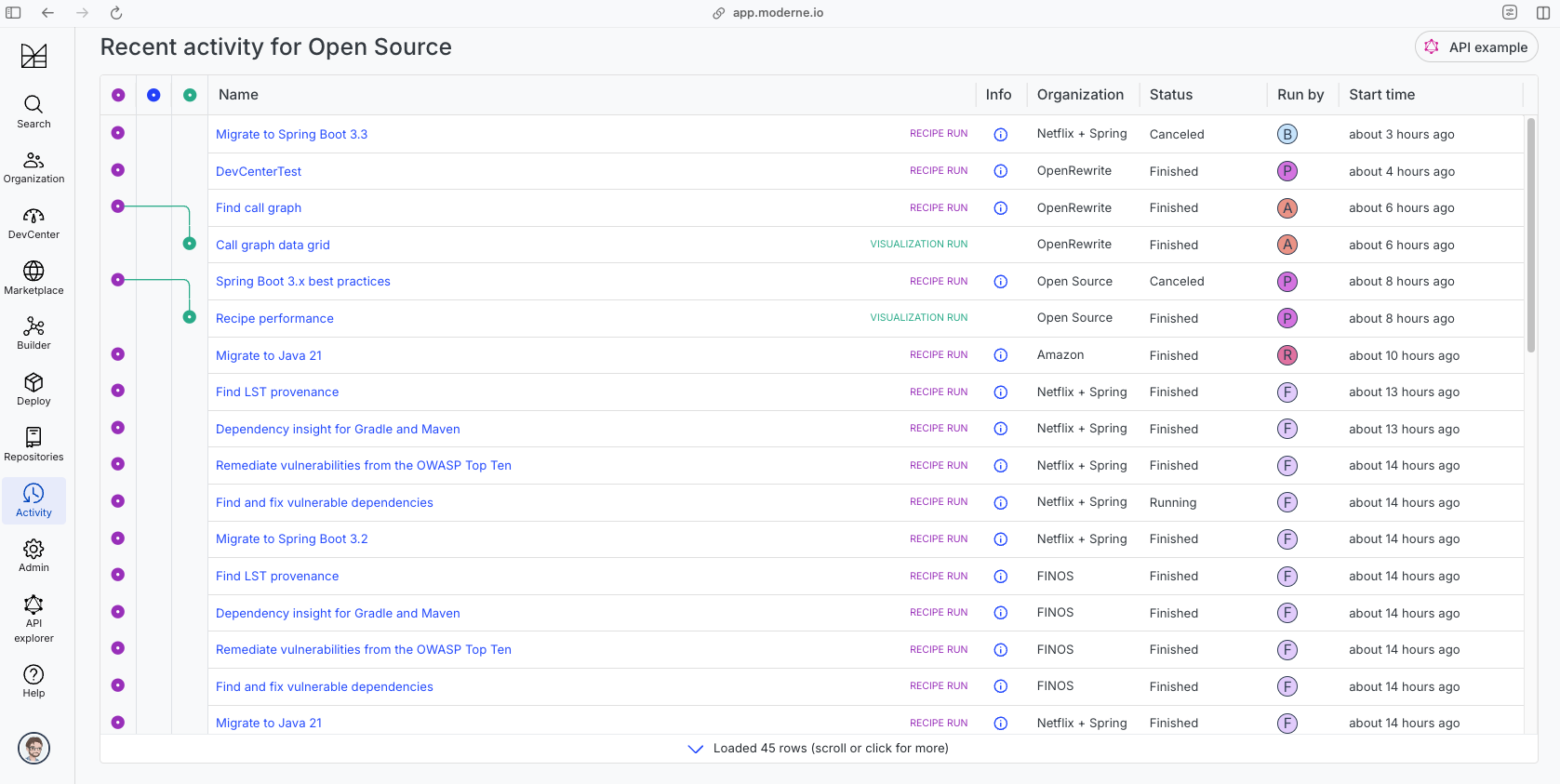

See how this recipe works across multiple open-source repositories

Run this recipe on OSS repos at scale with the Moderne SaaS.

The community edition of the Moderne platform enables you to easily run recipes across thousands of open-source repositories.

Please contact Moderne for more information about safely running the recipes on your own codebase in a private SaaS.

Data Tables

- TestMapping

- SourcesFileResults

- SearchResults

- SourcesFileErrors

- RecipeRunStats

Test mapping

io.moderne.prethink.table.TestMapping

Maps test methods to implementation methods with optional AI-generated summaries and inference metrics.

| Column Name | Description |
|---|---|
| Test source path | The path to the source file containing the test. |
| Test class | The fully qualified name of the test class. |
| Test method | The signature of the test method. |
| Implementation source path | The path to the source file containing the implementation. |
| Implementation class | The fully qualified name of the implementation class. |
| Implementation method | The signature of the implementation method being tested. |
| Test summary | AI-generated summary of what the test is verifying. |
| Test checksum | SHA-256 checksum of the test method source code for cache validation. |
| Inference time (ms) | Time taken for the LLM to generate the summary, in milliseconds. |
| Input tokens | Number of tokens in the input prompt sent to the LLM. |
| Output tokens | Number of tokens in the response generated by the LLM. |
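The Test checksum column exists for cache validation: a stored summary is only worth reusing if the test source has not changed. A minimal sketch of that check follows, assuming the checksum is the hex-encoded SHA-256 of the UTF-8 test method source. The encoding, any normalization applied before hashing, and the names TestMappingRow, sha256Hex, and isSummaryStale are assumptions for illustration, not the table's documented export format.

```typescript
import { createHash } from "node:crypto";

// Hypothetical row shape: field names are illustrative, not an official schema.
interface TestMappingRow {
  testSourcePath: string;
  testMethod: string;
  testSummary: string;
  testChecksum: string; // assumed: hex-encoded SHA-256 of the test method source
}

// Hash the source text with SHA-256 and return lowercase hex.
function sha256Hex(text: string): string {
  return createHash("sha256").update(text, "utf8").digest("hex");
}

// A cached summary is stale when the stored checksum no longer matches the source.
function isSummaryStale(row: TestMappingRow, currentTestSource: string): boolean {
  return row.testChecksum !== sha256Hex(currentTestSource);
}
```

With a check like this, a summary would only need to be regenerated for rows where isSummaryStale returns true, which avoids spending inference time and tokens on unchanged tests.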

Source files that had results

org.openrewrite.table.SourcesFileResults

Source files that were modified by the recipe run.

| Column Name | Description |
|---|---|
| Source path before the run | The source path of the file before the run. null when a source file was created during the run. |
| Source path after the run | A recipe may modify the source path. This is the path after the run. null when a source file was deleted during the run. |
| Parent of the recipe that made changes | In a hierarchical recipe, the parent of the recipe that made a change. Empty if this is the root of a hierarchy or if the recipe is not hierarchical at all. |
| Recipe that made changes | The specific recipe that made a change. |
| Estimated time saving | An estimate of the effort a developer would need to make the change manually instead of using this recipe, in seconds. |
| Cycle | The recipe cycle in which the change was made. |

Source files that had search results

org.openrewrite.table.SearchResults

Search results that were found during the recipe run.

| Column Name | Description |
|---|---|
| Source path of search result before the run | The source path of the file with the search result markers present. |
| Source path of search result after the run | A recipe may modify the source path. This is the path after the run. null when a source file was deleted during the run. |
| Result | The trimmed printed tree of the LST element that the marker is attached to. |
| Description | The content of the description of the marker. |
| Recipe that added the search marker | The specific recipe that added the Search marker. |

Source files that errored on a recipe

org.openrewrite.table.SourcesFileErrors

The details of all errors produced by a recipe run.

| Column Name | Description |
|---|---|
| Source path | The file that failed to parse. |
| Recipe that made changes | The specific recipe that made a change. |
| Stack trace | The stack trace of the failure. |

Recipe performance

org.openrewrite.table.RecipeRunStats

Statistics used in analyzing the performance of recipes.

| Column Name | Description |
|---|---|
| The recipe | The recipe whose stats are being measured both individually and cumulatively. |
| Source file count | The number of source files the recipe ran over. |
| Source file changed count | The number of source files which were changed in the recipe run. Includes files created, deleted, and edited. |
| Cumulative scanning time (ns) | The total time spent across the scanning phase of this recipe. |
| Max scanning time (ns) | The max time scanning any one source file. |
| Cumulative edit time (ns) | The total time spent across the editing phase of this recipe. |
| Max edit time (ns) | The max time editing any one source file. |
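Because the timing columns are reported in nanoseconds, it can help to convert them before comparing recipes. The sketch below derives total time in milliseconds, mean time per source file, and the fraction of files changed from one row. The row shape and the names RecipeRunStatsRow and summarizeStats are hypothetical and simply mirror the column names; they are not an official export schema.

```typescript
// Hypothetical row shape mirroring the RecipeRunStats columns; names are illustrative.
interface RecipeRunStatsRow {
  recipe: string;
  sourceFileCount: number;
  sourceFileChangedCount: number;
  cumulativeScanningTimeNs: number;
  maxScanningTimeNs: number;
  cumulativeEditTimeNs: number;
  maxEditTimeNs: number;
}

interface StatsSummary {
  recipe: string;
  totalMs: number;       // scanning + editing time, in milliseconds
  meanMsPerFile: number; // totalMs divided by the number of source files
  changedRatio: number;  // fraction of source files that were changed
}

const NS_PER_MS = 1e6;

function summarizeStats(row: RecipeRunStatsRow): StatsSummary {
  const totalMs = (row.cumulativeScanningTimeNs + row.cumulativeEditTimeNs) / NS_PER_MS;
  // Guard against division by zero when the recipe ran over no files.
  const files = row.sourceFileCount;
  return {
    recipe: row.recipe,
    totalMs,
    meanMsPerFile: files > 0 ? totalMs / files : 0,
    changedRatio: files > 0 ? row.sourceFileChangedCount / files : 0,
  };
}
```

For a search-style recipe such as this one, a changed ratio near zero with a non-trivial total time would be expected, since results are typically recorded in data tables instead of as source edits.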